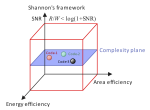

Information theory has driven the information and communication technology industry for over 70 years. Great successes have been achieved in both academia and industry. In theory, polar codes and spatially coupled low-density parity-check (LDPC) codes have achieved the theoretical bound. In practice, capacity-approaching coding schemes such as turbo, polar, ...

One of Claude Shannon’s best-remembered “toys” was his maze-solving machine, whose maze was created by partitions on a rectangular grid. A mechanical mouse was started at one point in the maze with the task of finding cheese at another point. Relays under the board guided successive moves, each of which was taken in the first open counterclockwise direction from the previ...

The entropy function plays a central role in information theory. Constraints on the entropy function in the form of inequalities, viz. entropy inequalities (often conditional on certain Markov conditions imposed by the problem under consideration), are indispensable tools for proving converse coding theorems. In this expository article, we give an overview o...

Student researchers are an indispensable part of the IEEE Information Theory Society (ITSoc). If academic research is analogized with a communication system, then our faculty body constitutes the codebook, and our student body represents the codewords traveling through the medium of time, taking with them our most consequential messages and research outputs...

Textual documents, such as manuscripts and historical newspapers, make up an important part of our cultural heritage. Massive digitization projects have been conducted across the globe to better preserve these often vulnerable documents and to provide easier access to them. These digital counterparts also make it possible to unlock the rich information contai...